A research paper from earlier this year shows some disturbing results for artificial intelligence’s potential use in the Internet of Things. Image-recognition systems can easily confuse objects when they are viewed at different angles or while moving. The most frightening consequences would come if such systems were used in autonomous cars or drones.

Auburn University Research

For some time artificial intelligence has carried a certain wow factor, and many have assumed that decisions would ultimately be better left to AI than to error-prone humans. But a recent study suggests that is not always true: humans, at least, can identify simple objects.

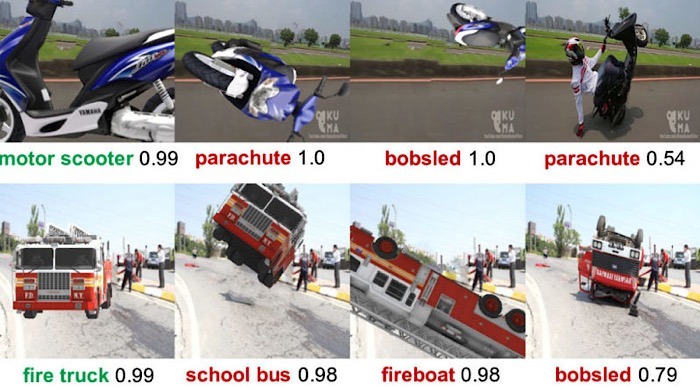

“If you start from a firetruck, you just need to rotate it a little bit, and it becomes a school bus with almost near-certain confidence,” explained Assistant Professor of Computer Science Anh Nguyen of Auburn University, a researcher who participated in the study.

The research team released a study titled “Strike (with) a Pose: Neural Networks Are Easily Fooled by Strange Poses of Familiar Objects,” for which it collected 3D models of objects that correspond to ImageNet classes.

They rotated the objects, then tested how the image was classified by a deep neural network. When the positions of the objects were just slightly altered, they were misclassified 97 percent of the time.
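The rotate-then-classify test described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' code: `toy_classifier` is a made-up stand-in for a real neural network, its 10-degree tolerance is an arbitrary assumption, and the resulting number says nothing about the study's actual figures.

```python
from typing import Callable, Iterable


def misclassification_rate(
    classify: Callable[[float], str],
    true_label: str,
    angles_deg: Iterable[float],
) -> float:
    """Return the fraction of poses for which the classifier's label is wrong."""
    angles = list(angles_deg)
    wrong = sum(1 for angle in angles if classify(angle) != true_label)
    return wrong / len(angles)


def toy_classifier(yaw_deg: float) -> str:
    """Hypothetical stand-in for a neural network.

    It only recognizes the firetruck when the object is within 10 degrees
    of its usual head-on pose (an arbitrary threshold chosen for illustration).
    """
    # Wrap the angle into the range [-180, 180) so 350 degrees counts as -10.
    wrapped = (yaw_deg + 180.0) % 360.0 - 180.0
    return "firetruck" if abs(wrapped) <= 10.0 else "school bus"


# Sweep the object through a full turn in one-degree steps.
rate = misclassification_rate(toy_classifier, "firetruck", range(0, 360))
print(f"Misclassified in {rate:.1%} of poses")
```

In a real experiment the classifier would be a trained image model and each pose would be a rendered image of the 3D object, but the bookkeeping is the same: count how often the predicted label differs from the true one across the poses tested.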

That extreme failure rate could have disastrous consequences, as the researchers pointed out, using self-driving cars as their example. Objects in the real world appear on roads in an “infinite variety of poses,” so autonomous cars will need to correctly identify any object that may appear in their path to “handle the situation gracefully and minimize damage.”

Nguyen pointed out that this challenge comes into play wherever AI is used, such as with robots in a warehouse or in your home that are trying to retrieve and pick up things for you. Those objects could be in any orientation and could be anywhere. He also pointed to its use by the TSA in airports and in target recognition on the battlefield.

He noted that not only is it easy for a firetruck to be recognized as a school bus, but a taxi can look like a forklift when examined through binoculars.

No Good Solution

Nguyen was asked if there was a solution to this issue and replied, “It depends on what we want to do. If we want to, let’s say, have reliable self-driving cars, then the current solution is to add more sensors to it. And actually you rely on these sets of sensors rather than just images, so that’s the current solution.”

“If you want to solve this vision problem, just prediction based on images, then there’s no general solution. A quick and dirty hack nowadays is to add more data, and in the model world, naturally they become more and more reliable, but then it comes at a cost of a lot of data, millions of data points.”

That doesn’t make it seem like there’s any good solution to this problem, and it leaves a feeling of uncertainty, if not outright unease. It certainly doesn’t inspire me to jump into an autonomous car anytime soon.

What do you think of this study? Do you think Nguyen is right with regard to AI in autonomous cars? After all these years of testing, do you think they could really be confused so easily? Add your thoughts and concerns to the comments below.

Image Credit: Alcorn et al. 2018 and Public domain