The Internet of Things, or IoT, is growing by leaps and bounds, with millions of new sensors and devices going online every month. If you feel like you’ve been hearing a lot about it recently, that’s probably because, despite a fairly long history, the Internet of Things has only recently started to truly take off, powered by cheap, low-power components, widespread Internet connectivity, and a lot of interest from both businesses and consumers.

We’re looking at everything from smart toasters to smart cities, from RFID tags on supply chains to medical monitoring implants, from learning thermostats to self-driving cars. Where did it all come from, though? How did we go from having basically nothing connected to the Internet to having more connected devices than people on earth? While it’s far from comprehensive, the timeline below should give you a general idea of where IoT has come from and where it’s headed.

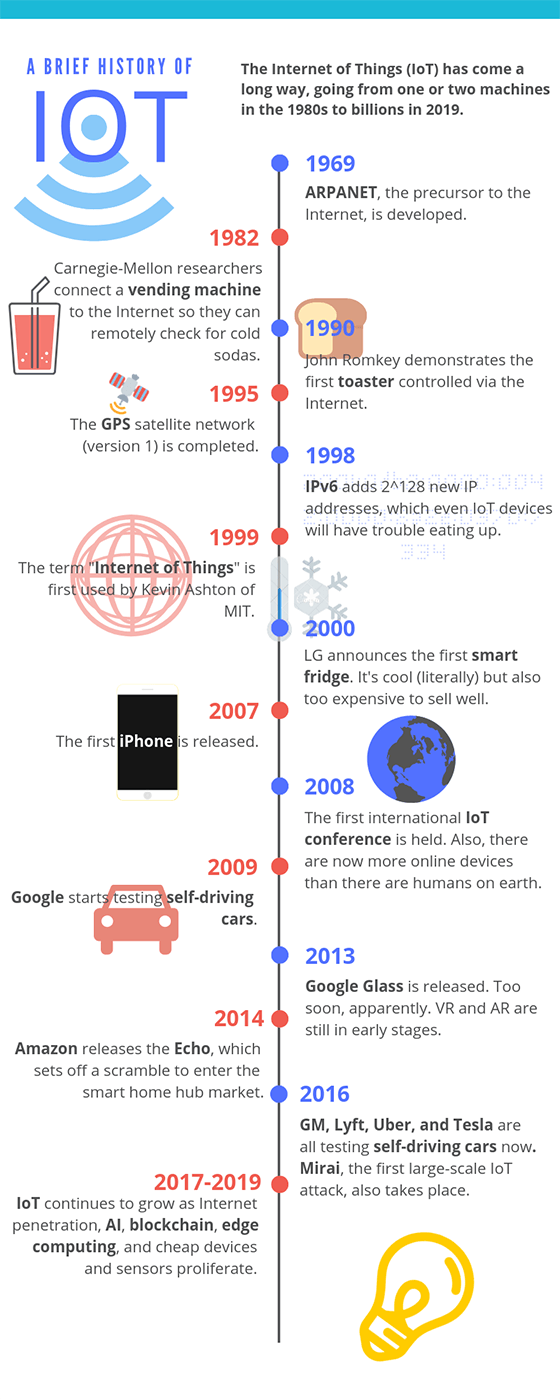

A timeline of selected major events in IoT

1969:

ARPANET, the precursor to the modern Internet, is developed and put into service by ARPA (renamed DARPA in 1972), the U.S. Defense Department’s Advanced Research Projects Agency. This is foundational to the “Internet” part of the Internet of Things.

1980s:

Commercial providers begin opening up the network that grew out of ARPANET to the public, making it possible for anyone to connect devices to it.

1982:

Programmers at Carnegie Mellon University connect a Coca-Cola vending machine to ARPANET, allowing them to check whether the machine has cold sodas before walking over to buy one. This is often cited as one of the first IoT devices.

1990:

John Romkey, in response to a challenge, connects a toaster to the Internet and successfully turns it on and off remotely, bringing us even closer to what we think of as modern IoT devices.

1993:

Engineers at the University of Cambridge, upholding the now well-established tradition of combining the Internet with appliances and food, develop a system that takes pictures of a coffee machine three times a minute so that workers can check its status remotely. World’s first webcam!

1995:

The U.S. government’s long-running GPS satellite program reaches full operational capability, a big step toward providing one of the most vital components for many IoT devices: location.

1998:

IPv6 becomes a draft standard, making room for far more Internet-connected devices than IPv4 allows. While IPv4’s 32-bit addresses provide only about 4.3 billion (2^32) unique addresses, IPv6’s 128-bit addresses provide 2^128, or roughly 340 undecillion. (That’s 340 followed by 36 zeroes!)
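The address counts in the 1998 entry follow from simple powers of two: an n-bit address can take 2^n distinct values. A minimal Python sketch of that arithmetic, cross-checked against the standard library’s `ipaddress` module, might look like this. Keep in mind that these totals count every possible value, including reserved ranges, so the number of addresses actually usable by devices is somewhat smaller.

```python
import ipaddress

# An n-bit address field can hold 2**n distinct values.
ipv4_addresses = 2 ** 32
ipv6_addresses = 2 ** 128

# Cross-check with the standard library: a /0 network spans the entire address space
# (reserved and special-purpose ranges included).
assert ipaddress.IPv4Network("0.0.0.0/0").num_addresses == ipv4_addresses
assert ipaddress.IPv6Network("::/0").num_addresses == ipv6_addresses

print(f"IPv4: {ipv4_addresses:,} addresses")    # IPv4: 4,294,967,296 addresses
print(f"IPv6: {ipv6_addresses:.3e} addresses")  # IPv6: 3.403e+38 addresses

# One undecillion is 10**36, so IPv6 offers about 340 undecillion addresses.
print(f"About {ipv6_addresses // 10**36} undecillion")  # About 340 undecillion
```

Each extra bit doubles the space, so the 96 additional bits in IPv6 multiply IPv4’s total by 2^96 rather than by 4.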

1999:

This is a big year for IoT, since it’s probably when the phrase was first used. Kevin Ashton, who headed MIT’s Auto-ID Center, included it in a presentation to Procter & Gamble executives as a way to illustrate the potential of RFID tracking technology.

2000:

LG announces what has become one of the quintessential IoT devices: the Internet refrigerator. It was an interesting idea, complete with a screen and inventory tracking to help you keep tabs on what was in your fridge, but its price tag of more than $20,000 USD didn’t win it much love from consumers.

2004:

The phrase “Internet of Things” starts popping up in book titles and makes scattered media appearances.

2007:

The first iPhone appears on the scene, offering a whole new way for the general public to interact with the world and Internet-connected devices.

2008:

The first international IoT conference is held in Zurich, Switzerland. The timing is fitting, since by some estimates 2008 is also the first year in which the number of Internet-connected devices surpasses the number of people on earth.

2009:

Google starts testing self-driving cars, and St. Jude Medical releases Internet-connected pacemakers. St. Jude’s devices will go on to make more history in 2016, when they become the first IoT medical devices to have major security flaws publicly exposed (fortunately, with no reported harm to patients). Bitcoin also launches this year, introducing the blockchain technology that many expect to become a big part of IoT.

2010:

The Chinese government names IoT as a key technology and makes it part of its long-term development plan. The same year, Nest Labs is founded; its learning thermostat, released in 2011, learns your habits and adjusts your home’s temperature automatically, putting the “smart home” concept in the spotlight.

2011:

Market research firm Gartner adds IoT to its “hype cycle,” a graph that tracks how expectations for a technology rise and fall over time before settling in line with its real-world usefulness. As of 2018, IoT was just coming off the peak of inflated expectations and may be headed for a reality check in the trough of disillusionment before ultimately reaching the plateau of productivity.

2013:

Google Glass is released: a revolutionary step in IoT and wearable technology, but possibly ahead of its time. It flops pretty hard.

2014:

Amazon releases the Echo, paving the way for a rush into the smart home hub market. In other news, the Industrial Internet Consortium forms to develop industrial IoT standards, highlighting the potential for IoT to change the way any number of manufacturing and supply chain processes work.

2016:

General Motors, Lyft, Tesla, and Uber are all testing self-driving cars. Unfortunately, the first massive IoT malware attack is also confirmed: the Mirai botnet infects IoT devices that still use their manufacturers’ default logins, takes them over, and uses them to launch DDoS attacks against popular websites.

2017-2019:

IoT development gets cheaper, easier, and more broadly accepted, leading to small waves of innovation across the industry. Self-driving cars continue to improve, blockchain and AI begin to be integrated into IoT platforms, and growing smartphone and broadband adoption keeps IoT an attractive proposition for the future.

The future of IoT

If you can believe the Gartner Hype Cycle, we’re in for a readjustment of our expectations within the next few years. Long-term, though, IoT is probably just going to be the new normal. Controlling your home through your smartphone is pretty neat. Getting a real-time picture of every item in your supply chain is also pretty neat. And who doesn’t like asking Alexa stupid questions?

Technologies like AI and blockchain are increasingly being used to make our devices more independent and better networked, and the rise of “edge computing” is largely driven by the realization that, with so many IoT devices generating data, sending everything on a long round trip to the cloud and back is often impractical; processing data closer to where it is produced cuts latency and bandwidth use. Anything that requires mass hardware adoption takes time, though, so the shift toward an Internet of Things will be gradual. This is probably a good thing, too, since it will buy us some time to figure out the privacy and security issues that will inevitably crop up as more things go online.