Many of us fondly remember TARS, the back-chatting tactical AI robot from Interstellar (2014). Not only does it manage to save human lives on the spaceship Endurance (even inside a black hole!), it does so with humor, irony and conviction.

We have already discussed the growing popularity of voice assistants in the consumer IoT space. For better or worse, real-world AI assistants are learning, much like TARS, to understand context, show empathy and display feelings.

The question is: do you relish the thought of robots displaying humor, wit and sarcasm the way human beings do? Aren't these the very qualities that make us human in the first place?

Bill Gates sees it differently: he firmly believes that AI taking over human jobs is inevitable. Read in context, though, what Gates really means is that AI will free human beings from the monotony of hard work. More time to play video games!

Having said this, the threat of an AI takeover of human society needs to be examined on its own merits.

AI’s Advantage Over Humans

In raw processing speed, AI systems have a lead over humans of several orders of magnitude.

While humans have to make do with biological neurons firing at 120 to 200 Hz, a modern microprocessor is clocked millions of times faster. Its signals also travel at a large fraction of the speed of light. If you have ever lost a chess game to a computer, imagine an opponent who is a million times faster still.
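To put the speed gap in numbers, here is a back-of-the-envelope ratio. It assumes a 2 GHz processor clock, an illustrative figure not given in this article, and takes the 200 Hz upper end of the neuron firing range:

$$\frac{f_{\text{CPU}}}{f_{\text{neuron}}} \approx \frac{2 \times 10^{9}\ \text{Hz}}{2 \times 10^{2}\ \text{Hz}} = 10^{7}$$

At the 120 Hz lower end, the ratio rises to roughly $1.7 \times 10^{7}$. Clock rate is only a crude proxy, since a neuron and a processor do very different work per cycle, but it suggests the raw speed gap is around seven orders of magnitude.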

Furthermore, AI engines will increasingly combine neural networks, machine learning, self-reliant systems and advanced microprocessors.

There have also been recent developments in the semiconductor industry with regard to convolutional neural networks (CNNs) and deep learning systems. One of their major applications is autonomous vehicles.

With training, these vehicles can learn to do many things, such as predicting pedestrian movements or delivering groceries. Step by step, intelligent networks and the data behind them are coming together to create self-reliant systems. It won't be long before TARS is chauffeuring you around town in a self-driving taxi.

Almost everyone, from TI, Intel and AMD to Motorola, is in the race to build intelligent chipsets.

Teaching AI Engines to Become Smarter

Holding a conversation with an AI engine requires teaching it precise context, along with the following capabilities.

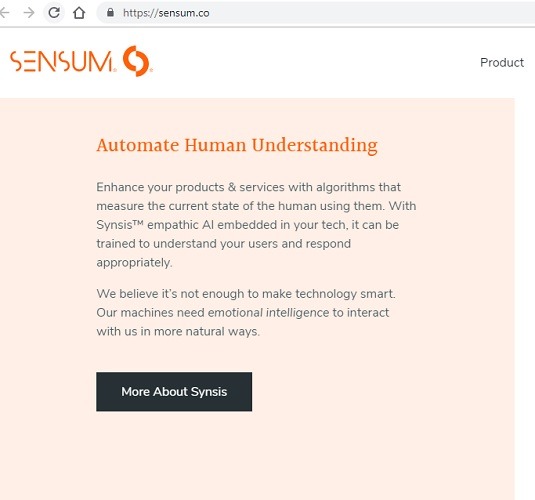

- Artificial Empathy (AE): This is a whole new stream of development in AI systems in which robots are taught human emotions. Sensum is one of the companies working in this area.

- Teaching AI Dexterity and Learning without Supervision: AI agents can also be taught to understand social communication protocols and slang, and to pick up new ideas on their own.

- Building Routines: If you are working with a smart speaker like Alexa or Google Home, you might encounter a feature called "Routines." You only need to set up a routine once; after that, a single trigger phrase makes the device complete a whole series of tasks.
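The routine idea above boils down to mapping one trigger phrase to an ordered list of actions. The sketch below models that in plain Python. It is a hypothetical illustration only: `Routine`, `RoutineEngine`, `teach` and `hear` are invented names, not part of the Alexa or Google Home APIs, and the "actions" just return strings instead of controlling real devices.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model of a smart-speaker routine: one trigger phrase
# mapped to an ordered list of actions. Not a real vendor API.
@dataclass
class Routine:
    trigger: str
    actions: list[Callable[[], str]] = field(default_factory=list)

class RoutineEngine:
    def __init__(self) -> None:
        # Routines stored by normalized trigger phrase.
        self._routines: dict[str, Routine] = {}

    def teach(self, routine: Routine) -> None:
        # The routine is taught once and remembered.
        self._routines[routine.trigger.lower().strip()] = routine

    def hear(self, phrase: str) -> list[str]:
        routine = self._routines.get(phrase.lower().strip())
        if routine is None:
            return []  # unknown phrase: nothing happens
        # Run every action in order and collect the results.
        return [action() for action in routine.actions]

engine = RoutineEngine()
engine.teach(Routine("good morning", [
    lambda: "lights on",
    lambda: "weather forecast read",
    lambda: "coffee maker started",
]))
print(engine.hear("Good morning"))
# prints ['lights on', 'weather forecast read', 'coffee maker started']
```

Normalizing the phrase to lowercase keeps the sketch simple; a real assistant would rely on speech recognition and fuzzier matching.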

Confronting Our Fears: Dealing with a Robot Apocalypse

As science-fiction movies portray, there are apparent reasons to fear a mad scientist unleashing malicious AI agents. But there are at least as many good reasons why such fears are unnecessary and only create panic.

For a successful takeover of Earth, our prospective AI overlords would first have to start caring about their own self-preservation. Just because they are programmed to understand "feelings" does not mean they feel anything themselves.

Robots and AI agents are designed to put human interests first, which is very different from using their advanced intelligence to take over the planet. This is the point most people miss. These "beings" do not have the breath of life common to humans and animals. They are artificial programs built to be aware of the human psyche, and no amount of advanced programming about human ideas gives them a psyche of their own.

Then there is the fear of the unknown. Growing up on a steady diet of sci-fi movies, video games and YouTube documentaries, our impressionable minds are vulnerable to catastrophizing the most trivial issues.

Conclusion

Whatever your feelings about AI engines, their intelligence is making our lives better. When researching AI, it is important not to let Hollywood imagery shape your thinking. In short, AI is not going to take over human societies anytime soon.

What are your views on the so-called impending robot apocalypse? You are welcome to share a different perspective in the comments below.

Get the best of IoT Tech Trends delivered right to your inbox!